DS-Bridge: AI-Enabled Workflow

60-Second Summary (TL;DR)

The outcome: Reduced the path from product idea to production-ready UI from months to days by replacing static design documentation with a shared, executable system.

Why it mattered: The organization needed proof that design systems and AI-assisted workflows could scale securely and deliver real value beyond Figma artifacts.

What I did: Designed and validated an AI-enabled operating model that lets non-technical designers plan, build, validate, and document real components directly in code.

Proof: A junior designer with no coding background shipped a production component in under a week; the system produced 5 components, 95 tests, and a live documentation site adopted as the source of truth.

Timeframe: December 2025, 2 weeks from idea to full site

External POC Link: DS-Bridge

Overview

Primary Impact:

Turned months of design–development handoff into days by letting designers validate production-ready UI directly in code.

Secondary Impacts:

- Replaced static Figma documentation with a living, executable source of truth usable by designers, engineers, and product partners.

- Enabled non-technical contributors to safely build and validate real components directly in the codebase.

- Shifted engineering effort from manual translation to high-leverage refinement.

Role: AI / Systems-Focused Designer (Principal-level scope)

Scope: Company-wide whitelabel design system; design, documentation, workflow automation, and engineering integration.

Why This Is Hard: Required aligning human workflows, AI behavior, and enterprise security constraints while proving real production value, not a demo.

Why Leadership Needed Proof

This work originated from a VP of Product mandate to evaluate whether the company should continue investing in a whitelabel design system at scale. Leadership needed more than static design artifacts to justify further funding and adoption

Prior efforts relied on Figma-based components and written guidelines, which:

- Failed to drive consistent usage outside the design team

- Did not give engineering or product leaders confidence in real-world feasibility

- Required ongoing manual maintenance with unclear ownership

The core leadership question was not “can we design these components,” but:

- Can this system reduce time-to-market?

- Can it scale across teams without increasing headcount?

- Can it operate securely within enterprise AI constraints?

A traditional proof of concept would have been insufficient

- Static mocks would not surface technical constraints

- Documentation alone would not demonstrate adoption or workflow change

What leadership needed was proof of an operating model, not a visual demo:

- Evidence that designers, engineers, and product partners could work closer together

- Validation that AI-assisted workflows could produce production-ready output

- Confidence that this approach could become a long-term system of record

The Real Problem (Not the Obvious One)

What leadership thought the problem was:

- Create a small set of static whitelabel components in Figma

- Show visual examples of how other brands might be supported

- Prove concept viability at a surface level

What was missing from that framing:

- No consideration for how the system would scale

- No plan for long-term maintenance or contribution

- No clear ownership model

- No assessment of time, cost, or operational overhead

- No exploration of how AI workflows could reduce effort or improve quality

The real problem I identified:

- Design systems fail not at creation, but at contribution and maintenance

- Small updates accumulate hidden cost when every change requires manual Figma work and parallel documentation

- A decentralized design system spread across multiple Figma files had no single, reliable source of truth

What would have broken if nothing changed:

- Whitelabel components would quickly drift out of sync

- Files would continue to grow bloated and underused

- Designers would absorb ongoing manual maintenance work

- Engineers and product partners would still lack a clear, shared place to reference the system

The actual need:

- A way to rapidly scale a design system that is easy to maintain

- One URL, one system, one source of truth

- A workflow that makes small changes cheap at scale

Operating Model We Proved

Strategy: Treat design and development as a continuous, shared feedback loop where real code is the validation layer, not the final step

Mental model:

- Figma is a collaborative exploration tool, not the source of truth

- Code is where interaction quality, edge cases, and constraints are validated

- AI acts as an accelerator and guardrail, not a replacement for judgment

What this replaced:

- Treating Figma as the final artifact handed off to engineering

- Writing extensive documentation before behavior was validated

- Relying on engineers to surface interaction issues late in the process

What changes immediately when adopted:

- Designers approach Figma with implementation efficiency in mind

- Components are validated through interaction, not assumption

- Design, product, and engineering align earlier around what will actually ship

The 3 Decisions That Drove the Outcome

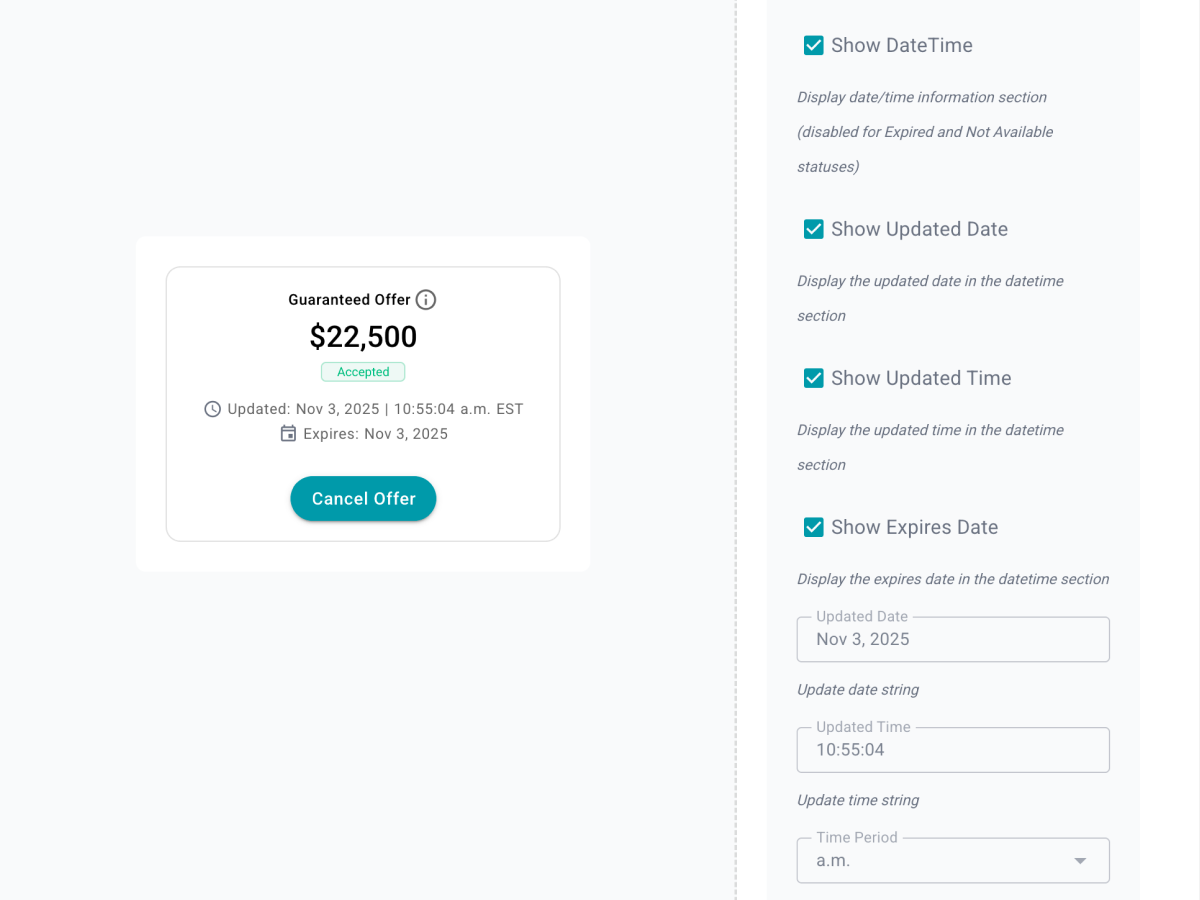

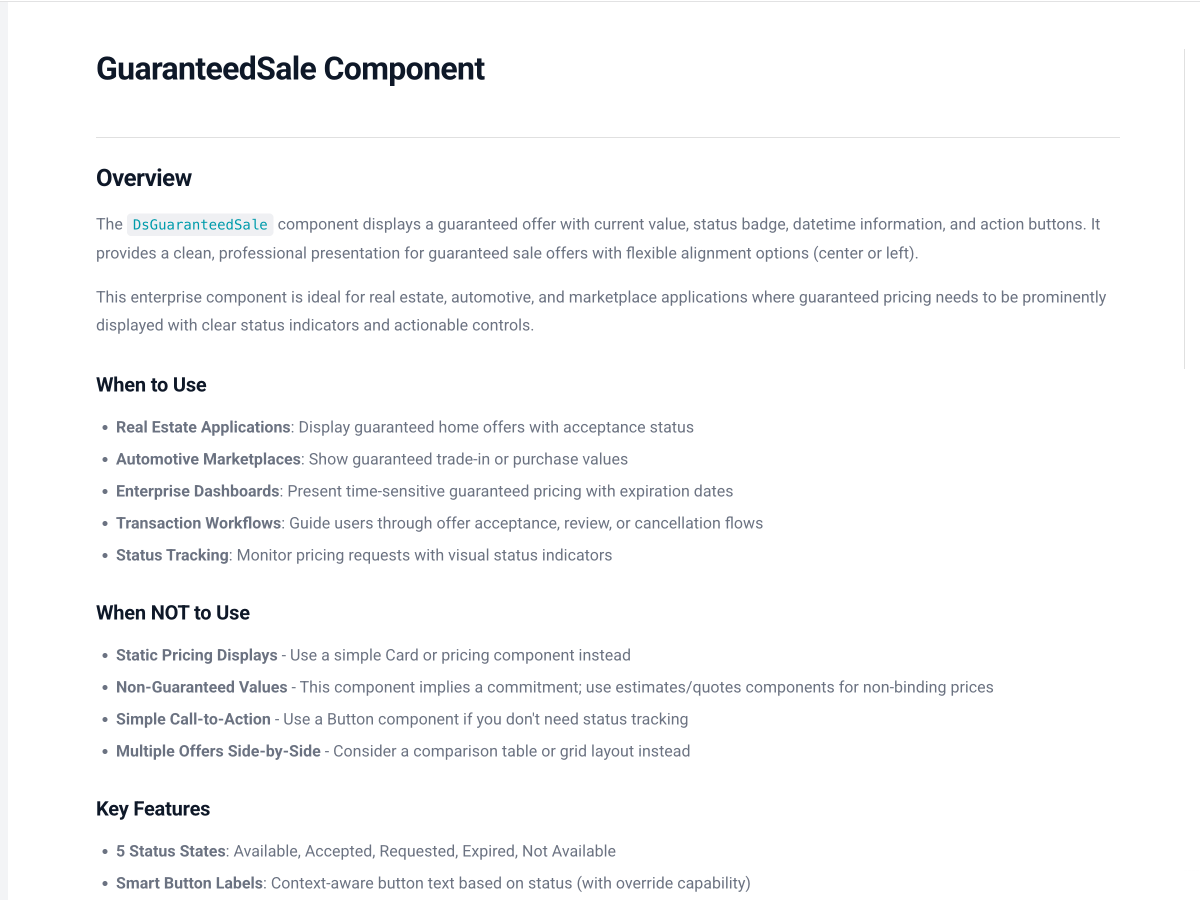

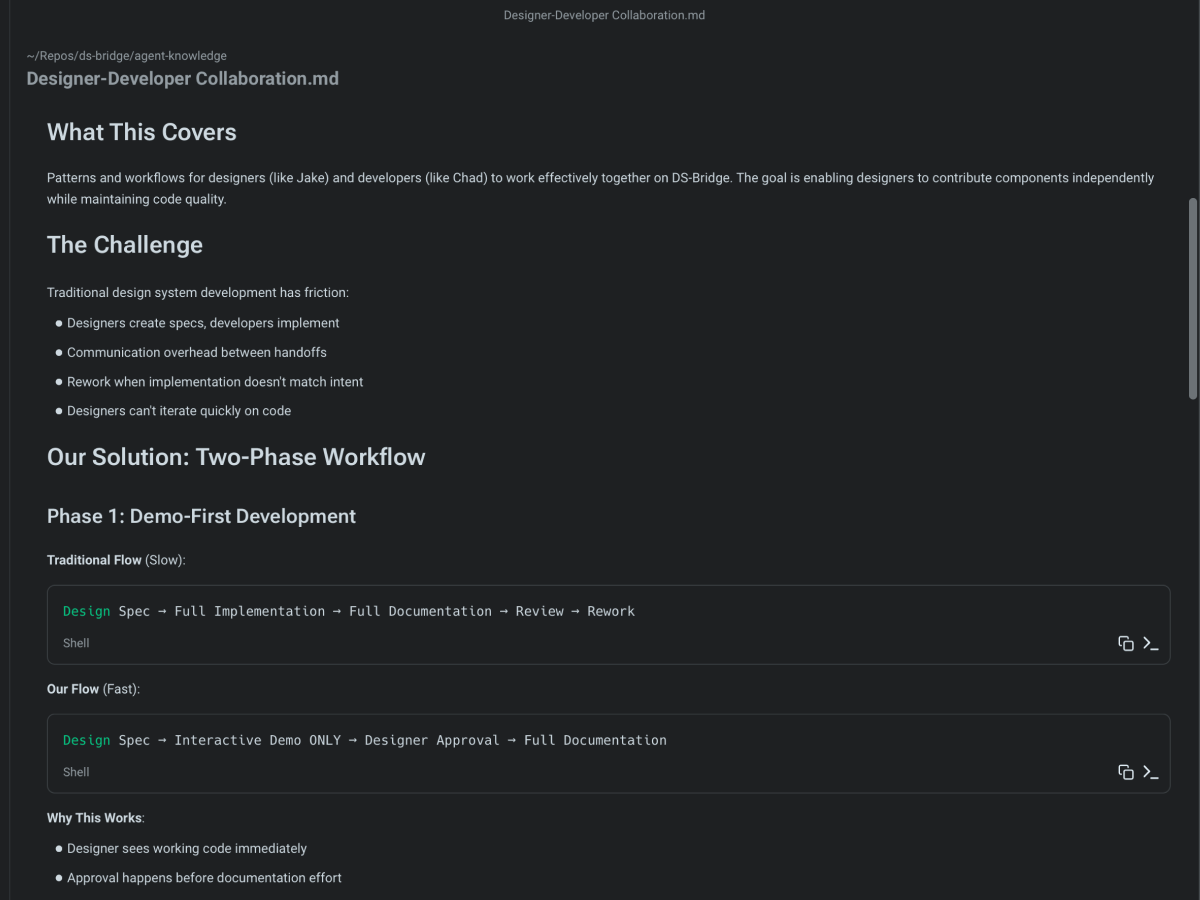

Decision 1: Component Demo-First, Two-Phase Workflow

Problem it solved: Designers and AI agents were attempting to refine component behavior and documentation simultaneously, which led to redundant work, bloated AI context, and lower-quality results

Options considered: Build component and documentation together in a single pass. Fully automate component and documentation generation. Separate component validation from documentation

Tradeoff accepted: Slower initial documentation in exchange for higher-quality components and dramatically less rework

Decision: Enforced a component demo-first workflow where designers:

- Push a real component into the codebase

- Interact with it directly to validate all states, properties, and edge cases

- Iterate between Figma and code until behavior is correct

- Enter documentation only after the component is validated

Why this worked:

- Reduced AI context window size, improving output quality

- Prevented designers from trying to solve too much at once

- Lowered cognitive load for non-technical designers

- Ensured documentation reflected real, validated behavior

Proof:

- Fewer rework cycles per component

- Faster convergence on correct behavior

- Cleaner, more accurate documentation

Decision 2: Structured Session Planning and Command-Based Guardrails

Problem it solved: Non-technical designers could easily become disoriented in the terminal, jump between tasks, or give the AI unscoped instructions that led to inefficiency, confusion, and low-quality output

Options considered: Allow free-form prompting and trust designers to self-organize. Create heavy documentation or training upfront. Encode structure directly into the workflow.

Tradeoff accepted: Reduced flexibility in exchange for clarity, focus, and repeatability

Decision: Introduced structured session planning and designer-friendly commands that:

- Forced agreement on scope before execution

- Guided designers through a predictable sequence of actions

- Automatically captured progress, decisions, and outcomes

Why this worked:

- Prevented designers from jumping around or over-scoping work

- Taught systems thinking implicitly through repeated use

- Rewarded focus with visible progress and higher-quality results

- Made contribution feel safe and approachable for non-technical users

Proof:

- Designers stayed focused within sessions

- Reduced need for corrective prompting

- Increased confidence and willingness to contribute over time

Decision 3: Let a Junior, Non-Technical Designer Own a Real Component

Problem it solved: A senior-led proof would not demonstrate scalability or cultural change; leadership needed evidence that the system worked for people without systems thinking or coding experience

Options considered: Use myself as the primary operator of the system. Pair closely with a designer and guide each step. Give a junior designer real ownership and step back.

Tradeoff accepted: Higher risk of failure in exchange for stronger proof of scalability and enablement

Decision: Chose a junior designer with no coding background and limited systems exposure and:

- Taught only the minimal set of commands needed to operate the workflow

- Explained where to view live output

- Intentionally avoided intervening in debugging, refinement, or problem-solving

Why this worked:

- Forced the system to be self-explanatory and resilient

- Created true ownership instead of dependency

- Enabled the designer to build confidence through independent problem-solving

- Revealed whether the workflow could teach systems thinking implicitly

Proof:

- The designer independently shipped and documented a real component

- Continued working on the system without supervision

- Demonstrated leadership and initiative beyond their role

Evidence and Validation

Cycle-time compression:

- Reduced idea-to-interactive validation from weeks or months to days

- Enabled same-week validation of component behavior in real code

Production proof:

- 5 whitelabel-ready components shipped

- 95 automated tests with full TypeScript coverage

- Live documentation site used as the system of record

Behavioral signals:

- A junior designer independently planned, built, debugged, and documented a component

- Continued contribution without supervision or intervention

- Increased designer confidence and ownership over the system

What would not have happened otherwise:

- Designers would not have validated interaction quality in real code

- Engineers would still translate intent instead of refining solutions

- Leadership would lack confidence in scalability beyond Figma artifacts

Constraints That Shaped the Work

Security and compliance:

- All AI workflows, tooling, and integrations required approval from internal AI engineering

- No external data leakage or unapproved tooling allowed

Organizational timing:

- Leadership availability was limited during execution

- Required proactive alignment with product owners and engineering partners

Human constraints:

- Designers had no prior terminal or coding experience

- The system needed to feel safe, guided, and reversible

Reflection (Forward-Looking)

Capability unlocked:

- Designing shared operating models where design and development converge in real code

How this changed my approach:

- I now prioritize workflow and system design over isolated deliverables

- I design for independence, ownership, and scale rather than supervision

What I look for next:

- Problems where AI can remove friction between disciplines

- Opportunities to make validation faster and decision-making cheaper

Appendix (Optional)

- Supporting artifacts

- Deep technical details

- Additional metrics or timelines

- Link to work / URL